Loiana Leal, Lecturer in Modern Languages, School of Modern Languages

Digital Exams Team, Learning Enhancement and Technology

Faculty of Humanities and Social Sciences and Learning and Teaching Development Service

What did you do?

Used Inspera to deliver formative and summative digital examinations in a language module.

Context

In 2020-21, the Module Leader (ML), Loiana Leal, took part in a focus group that chose Newcastle University's new digital assessment platform. In the 2021-22 academic year, ‘POR2010 – Level B Portuguese HE Intermediate’ began a three-year trial of Inspera for digital assessment. The aim, which arose from a student co-creation project for the POR2010 curriculum redesign, was to have an assessment that reflected the module's strong digital profile.

The Digital Exams Team fully supported the ML, delivering tailored training on exam design, marking features and feedback release via the Virtual Learning Environment (Canvas), and helping to roll out the exams. The team consistently provided information to support every step of exam preparation, keeping the ML and POR2010 students up to date.

By the end of the three-year trial, the teams involved aimed to have identified the advantages of using Inspera in language exams, as well as the challenges to address in order to refine processes, increase reliability and improve the student experience.

Who is involved?

Loiana Leal (POR2010 ML) and the LTDS Digital Exams Team worked together to support the POR2010 Inspera digital exams.

In Y1, the Digital Exams Team fully supported the ML in getting to know the system and learning how to work with Inspera. In Y2 and Y3, they offered outstanding support to the ML to ensure an excellent student experience during exams. The team was extremely responsive to the needs of the ML and students, going beyond what was expected to ensure a high-quality experience.

Students in the module were involved at various stages of the trial's development, giving feedback after formative and summative assessments delivered via Inspera and suggesting adjustments to the assessment design.

Why did you do it?

After tailoring the POR2010 module for flexible learning, Loiana aimed to further enhance the digital profile the module had developed during the lockdown years. The ML recognised an opportunity to invite students to collaborate in module design as consultants, assessing their own curriculum and suggesting changes. In focus groups, students identified the inconsistency of having a written paper at the end of the academic year while all their other assessment components were digital. Loiana introduced Inspera as a platform for the module's assessment, explaining that it was new and had not previously been used in the School of Modern Languages (SML). Students discussed this prospect in groups and with their representative, and welcomed the use of Inspera in a BYOD (Bring Your Own Device) format. The ML then outlined a three-year trial to assess the platform's reliability for the module's assessment strategy, covering formative and summative tasks as well as Listening, Reading and Writing assessments.

Exploring Inspera's capabilities for language module assessment allowed the ML to invest in innovation for POR2010. The initiative emerged from an early-stage curriculum co-creation project that not only welcomed students' voices but also incorporated their suggestions into the curriculum. It was also a chance to explore Inspera's suitability for language assessment, as no other modules in SML had used Inspera before. Summative assessments in Inspera were delivered within SEB (Safe Exam Browser), ensuring a locked-down exam, which could also resolve issues arising from the discontinuation of Sanako Study1200 in the Language Lab. Additionally, it was an excellent opportunity to work with the digital resources offered by Newcastle University, further developing the key learning skills associated with the digital learning and lifelong learning agenda already embedded in the module.

How did you do it?

Curriculum co-creation meets Inspera

In Y1, students were able to share ideas for formative assessment activities in H5P and Inspera through student focus groups and workshops led by their ML. The Semester 2 curriculum was tailored in collaboration with students, and new learning activities and formative assessments were designed and introduced as a result of the workshops. One set of activities involved formative listening tests in Inspera. Students reported feeling more confident about what they would be expected to do in their final assessment once they had had the opportunity to think about the exam design, the learning outcomes and the marking schemes. They also highlighted that using Inspera for formative tasks was beneficial to their experience.

In Y3 (2023-24), students taking POR2010 benefited from a curriculum designed in collaboration with their peers in previous years.

In Y1 of the trial (2021-2022), students opted for a BYOD exam. Student feedback on the BYOD exam was extremely positive about the Inspera interface and its features, for instance:

- exam navigation

- flagging questions

- anonymous marking tools

- feedback

- criteria and band tools

Having the opportunity to use their own devices was the biggest advantage highlighted in their feedback.

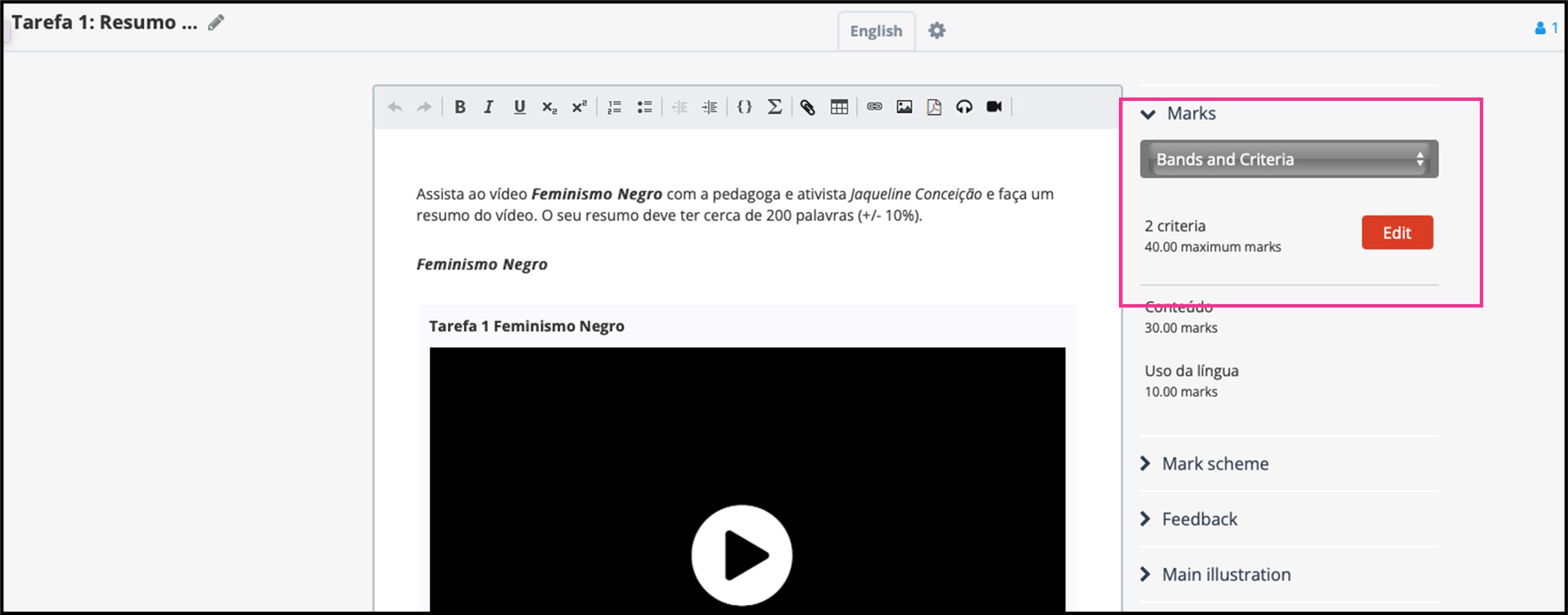

The Bands and Criteria tool in Inspera

However, a couple of issues arose, such as poor Wi-Fi signal in some venues and system crashes on a few students' devices. Reflecting on the lessons learned from this first experience allowed changes to be made ahead of the next round of exams.

In Y2, we trialled a mix of BYOD and university PCs. University PCs were used for Listening Tests in the Old Library Building (OLB) Language Labs because these exams included videos and required hardware such as headphones. This ensured that all students had access to the same equipment, removing any hardware advantage. BYOD was used for essay-type exams, but we still faced minor issues with language-specific keyboards; for instance, some students were unable to switch keyboards on their Windows laptops. This highlighted the need for more robust processes.

In Y3, exams were held in the OLB Language Labs, eliminating the need for separate exam instructions for different systems (Mac vs. Windows users). Liaison between SML, the Exams Office and the Digital Exams Team created a robust process that ensured validity, reliability and an excellent student experience. Students were also given the choice of using either Inspera's built-in special-character keyboard or keyboard codes to input special characters. Feedback showed that students preferred having options and felt confident during their exam that troubleshooting was readily available if they needed it.

Authentic Assessment

Language exams require distinct approaches to assessment due to the nature of the subject: students work with audio and video recordings for Oral and Aural practice and assessment.

Inspera allowed the ML to explore innovative ways of developing authentic assessment using videos. Because a submission point is easy to set up on Canvas, the ML could create formative practical tests with video and audio content that students could complete in class and at home. This helped students become familiar with the Inspera interface and confident in exploring the source materials on their own laptops as well as on desktop PCs. These tests also served as mock questions, preparing students for the Ad-Hoc Listening Test delivered in the OLB Language Labs. Inspera proved better for delivering such materials than other software we had used in our language labs, as it presented no issues with buffering, delays, or sound or video quality during practical sessions or exam delivery.

ML’s experience with Marking and Feedback features: an incredible benefit

By taking part in the focus groups and attending training sessions on Inspera, I realised that Inspera could speed up the marking process. Inspera offers a range of features for marking, assessing and giving feedback to students, as well as tools that facilitate marking in modules with several markers. As with any new software, users need to experiment with it to get used to the interface, which is intuitive and user-friendly. The Digital Exams Team is extremely responsive in supporting module teams via Teams and email. All my questions were resolved promptly, and all my suggestions and ideas were investigated. Inspera also offers a Help Centre, and the internal website created by the Digital Exams Team provides comprehensive tutorials and instructional materials.

The Inspera Help Centre website

All marks and feedback are released via Canvas. The two platforms (Inspera and Canvas) are integrated, allowing Professional Services (PS) staff to release and pull marks via the Canvas Gradebook, and enabling students to check their feedback without staff having to enter it into different systems or produce hard copies.

Student view of Inspera Feedback

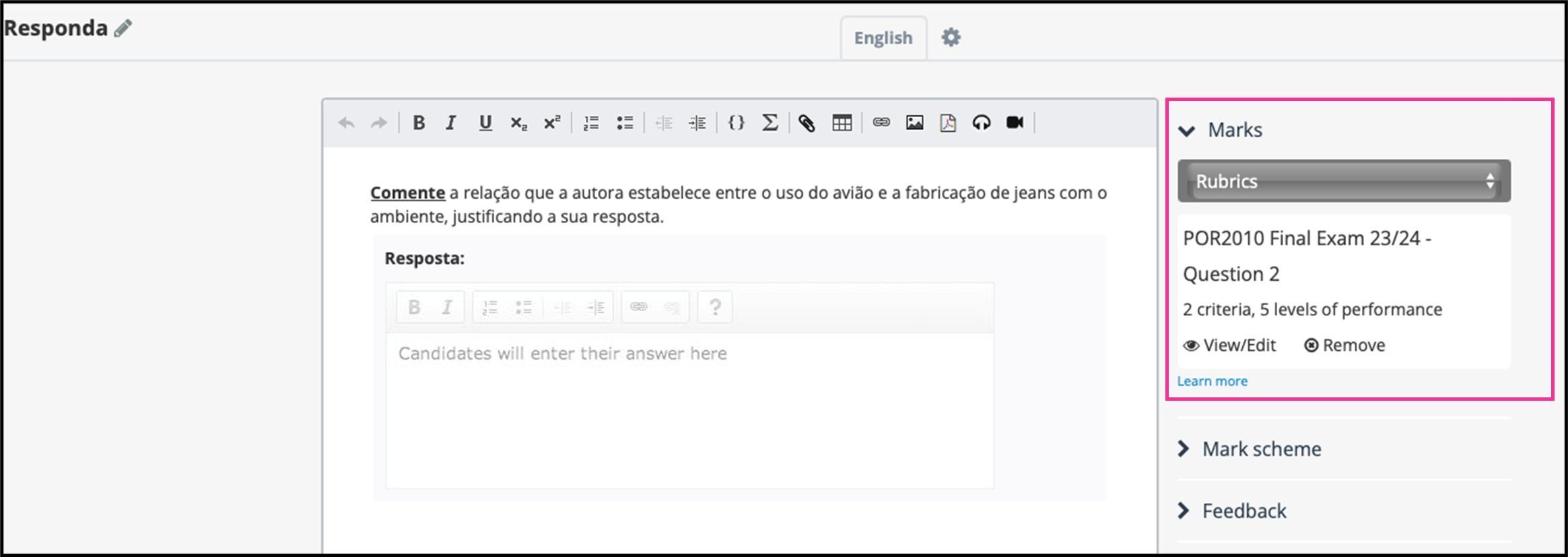

In my experience, the Rubrics feature in Inspera made the entire process even faster. I designed the rubrics in advance, including the feedback, and attached them to each question. During marking, I just needed to click on the rubric to award marks accordingly. Inspera still allows markers to add individual feedback for each question if they find it necessary.

The Rubrics tool in Inspera

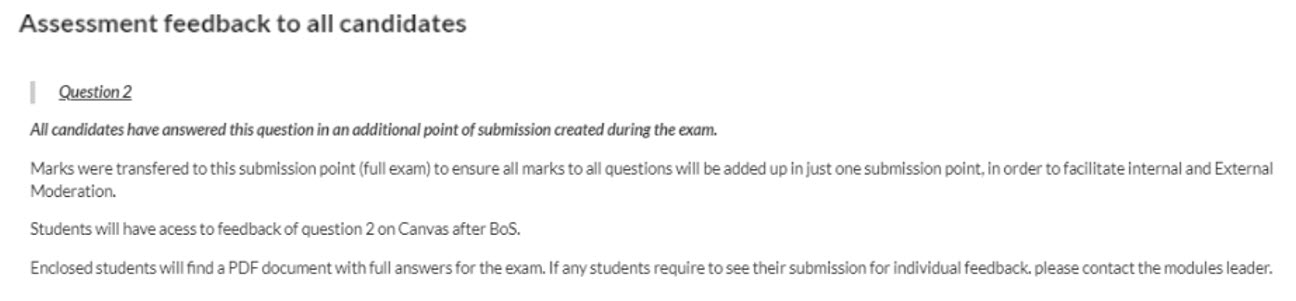

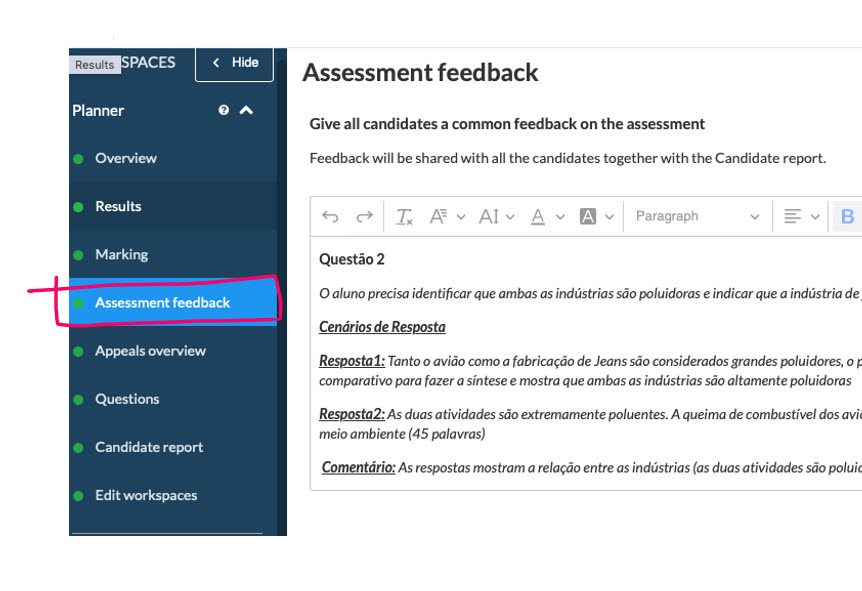

Another tool in ‘Assessment and Feedback’ allows general feedback to be written for the entire exam. I used this tool specifically to write general feedback on the questions where most of the cohort did not perform as expected, giving more detail on what was expected in the answers. This helped students improve their performance on these types of questions from Semester 1 to Semester 2.

General Feedback Tool in Inspera

Does it work?

It works! Most students reported a valuable and enjoyable experience and said they felt supported and prepared for the exam. Giving students formative practice scenarios is beneficial. Further details are provided below under ‘Module Performance’.

From my point of view, having everything in the same hub sped up the marking process by around 30-40%.

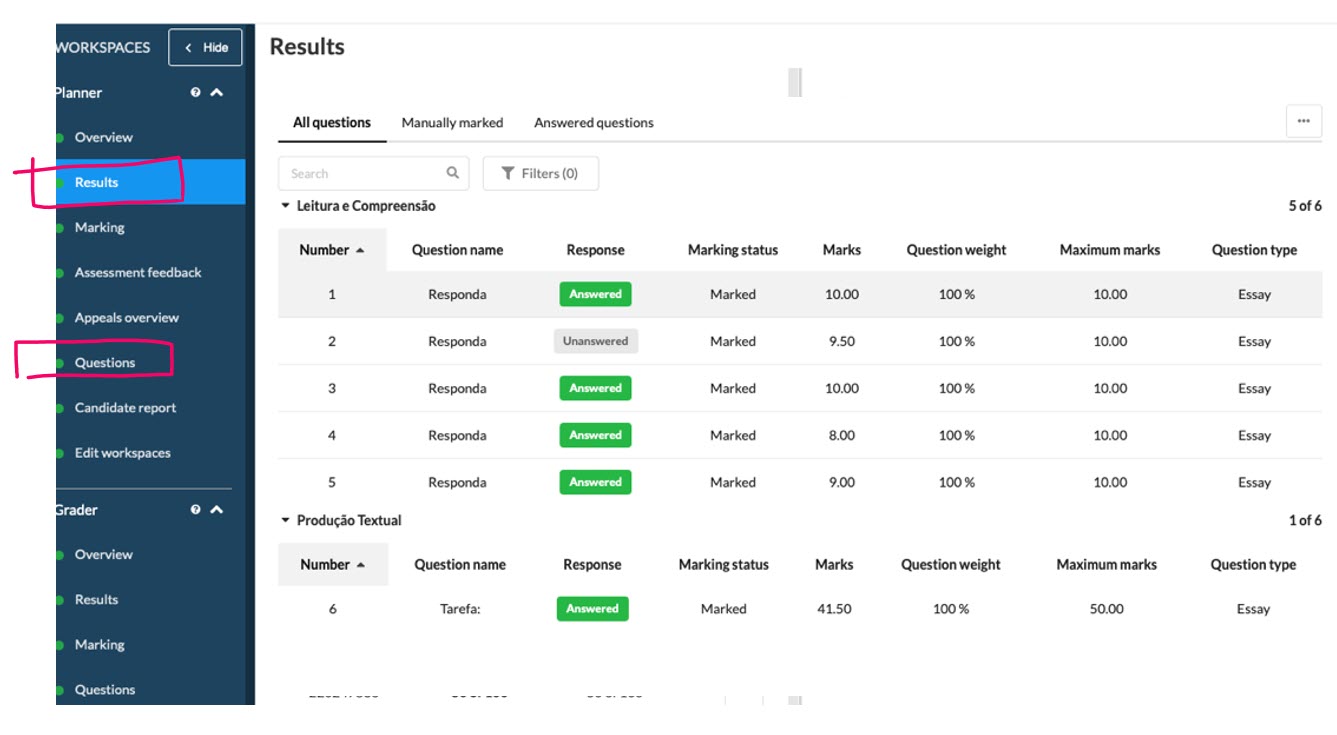

It was faster than working through paper scripts and then checking the questions again to identify the most common errors. Inspera allows me to flick through student exams question by question, and it gives me a panel where I can see the performance on each question and identify where feedback needs to be more precise.

The Results Panel in Inspera showing the breakdown of question marks from a student record

Inspera's Marking and Feedback tools (in Marking 2.0) are extremely efficient. The interface is user-friendly and intuitive, and the Digital Exams Team also delivers plenty of training, with further information available online. There are other tools for marking teams that I have not discussed here: the system helps to distribute exams among markers and offers several ways to make rubrics and marking instructions available to marking teams. The integration with Canvas is a great advantage for academics and PS teams when releasing feedback and results and pulling marks from Canvas.

Module Performance

At the start of the Inspera trial, one of my biggest concerns was whether, if students were required to bring their own devices (BYOD) for summative assessment in Inspera, I would detect a critical difference in student performance. These concerns grew after Y1, when a few students experienced crashes and had to finish their exams on a back-up paper. I compared students' exam results with their performance on a formative test in Inspera. This allowed me to judge whether the crashes had critically affected performance, and I concluded that the Y1 cohort, the strongest group I had had in 12 years of leading POR2010, performed as expected. Table 1 shows the POR2010 cohort's average performance in the final exam. There is no significant change in the trend.

| | 2016-17 | 2017-18 | 2018-19 | 2019-20 (24h take-home exam via Canvas) | 2020-21 (24h take-home exam via Canvas) | 2021-22 (Y1) Inspera BYOD | 2022-23 (Y2) Inspera BYOD + Uni desktops | 2023-24 (Y3) Inspera desktops |
|---|---|---|---|---|---|---|---|---|
| Highest mark | 84 | 77 | 80 | 86 | 88 | 77 | 74 | 88 |
| Lowest mark | 44 | 30 | 30 | 43 | 40 | 32 | 26 | 40 |
| Overall average | 67 | 56 | 58 | 65 | 62 | 55 | 54 | 60 |

Exam format:

- 2016-17 to 2018-19: the same format each year. Reading, with MCQs for reading and comprehension, 2 essay-type questions for reading and comprehension, 1 grammar section (MCQs, identifying, matching, analysing) and 1 essay-type question (300-350 words).

- 2019-20 and 2020-21: a similar format, with 4 essay-type reading and comprehension questions, 1 direct grammar question (matching columns) and 1 essay-type question (300-350 words). No MCQs, to avoid inflated marks, as these were take-home exams. Focus on critical and analytical skills.

- 2021-22 to 2023-24: the same format each year. A reading text with 5 comprehension questions and 1 essay-type question (300-350 words). No MCQs; complete focus on critical-analytical skills. Grammar is assessed in use, through its application in building coherent texts and in comparing and summarising information and arguments portrayed in different genres (for example, a poem and an image).

Table 1 – Performance in the final summative assessment: reading and writing

Undoubtedly, having a crash during a final exam adds stress, but the Exams Office and the Digital Exams Team carefully trained invigilators to ensure that troubleshooting was in place and that students would be given extra time to complete their exams if a crash occurred. This is not to say the experience was perfect; however, processes were improved year on year to minimise the risk of a crash or other technical issue with the system.

By trialling a new system, we are shaping its future reliability and suitability; we are also influencing improvements and decisions about the interface and the development of sufficient infrastructure and IT solutions to ensure better experiences. I feel this is exactly what happened here. I do recognise that some students may have encountered challenges, but the adoption of Inspera was not imposed by me or the university: students were part of the process, were entitled to make their own decisions, and faced the challenges along the way together with us.

In the Semester 1 exams of 2024-25, students completed a summative assessment in Inspera that included a 300-word essay-style question. Students faced no issues during their exam, which indicates that the processes developed and rolled out are now well adjusted to promote a positive assessment experience.

Further Information or Useful Resources

- Why would you run a locked down exam? See: https://www.ncl.ac.uk/learning-and-teaching/digital-technologies/inspera/secure-browser/

- Do you want to know how to share feedback on Inspera? See: https://www.ncl.ac.uk/learning-and-teaching/digital-technologies/inspera/inspera-feedback-release/

- Would you like to know more about content features? See: https://www.ncl.ac.uk/learning-and-teaching/digital-technologies/inspera/content-creation-features/

- Are you a PS colleague supporting a module team with an Inspera exam? See: https://www.ncl.ac.uk/learning-and-teaching/digital-technologies/inspera/inspera-professional-services/