Dr David Grundy, Director of Digital Education

Newcastle University Business School

Faculty of Humanities and Social Sciences

What did you do?

I used Panopto (ReCap) Analytics to inform changes to my teaching approaches and activities for my Executive MBA classes. Because of the flexible learning approaches adopted in 2020/21, each module's weekly pre-seminar content, which students reviewed to inform their understanding of a case study, was hosted on the Panopto (ReCap) recording platform and broken into bite-sized chunks, in very much a flipped seminar approach. This allowed me to review the Panopto (ReCap) analytics for the video recordings and see exactly which students (see example below) had reviewed the content before the seminar and which had not. As this was a very small cohort (n=14), I was then able to rework and tailor the seminar's examination of the case study, both to make the most of what students did know or had engaged with, and to support areas that clearly hadn't been engaged with but were key to know. The key elements were chunking the videos into multiple smaller parts and the viewer engagement statistics.

Who is involved?

Dr David Grundy, Director of Digital Education

How did you do it?

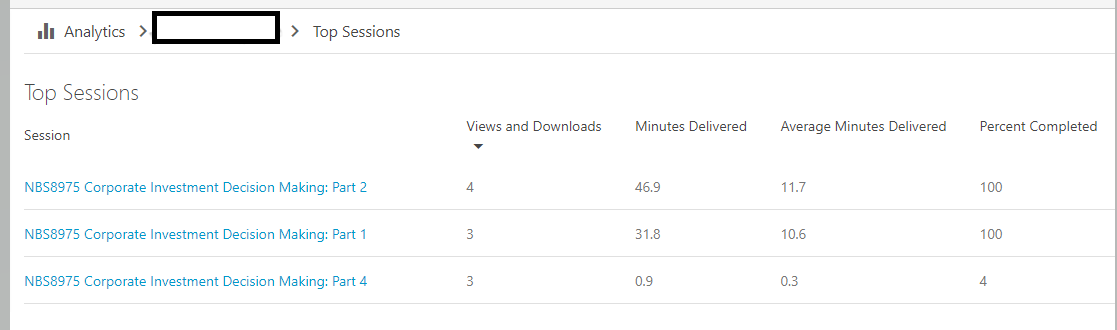

I used the Analytics functionality of the Panopto (ReCap) platform, drawing on three views: the Top Viewers/Users screen, which gives a good “at a glance” overview; the analytics for individual students; and the downloadable Viewer Engagement statistics, available through the Download Reports function at the bottom of the analytics page. The Top Viewers screen was useful for more immediate information on exactly which students had watched how much of each video, and gave good “headlines” on the general level of student engagement in the class.

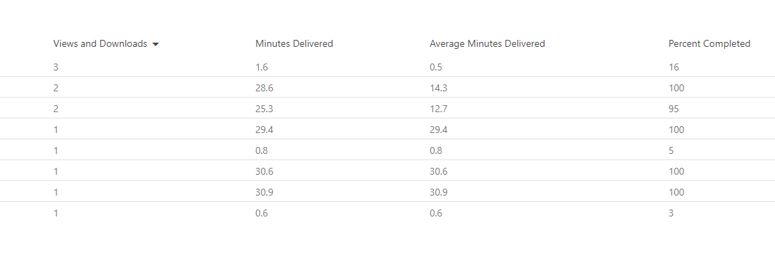

I then unpicked individual student engagement on the Analytics page. Simply clicking an individual user gives a powerful overview of exactly how much of a selection of videos that student has watched. In the screenshot below, for example, I can see that the student has watched parts 1 and 2 of that week's delivery, hasn't watched part 3 at all, and has barely touched part 4. This gives me a good understanding of how much of the flipped content the student has covered. With a very small cohort and enough prep time, this then allowed me to reconfigure the seminar's approach.

Finally, the downloadable viewer engagement statistics provide a heat map of content viewing. I used this to inform future pedagogic design choices at the module content review stage. By looking for commonalities in the data, I was able, for example, to quickly identify common “break points” for users. A “break point” is a point in the video at which the user stops it, either to resume later (they're taking a break) or never to return. One of my more conceptually complex chunked lectures, for example, had a common break point around six minutes in. I was able to identify that this was where I introduced a particularly complex theory, so this point has now been flagged for future design: I will review other approaches to these concepts or build in more digestible examples. Unfortunately, the Panopto (ReCap) platform does not look for these commonalities automatically in the download; finding them requires a little manipulation in Excel afterwards or, with a small enough cohort, eyeballing the data yourself and drawing inferences to investigate.
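The search for common “break points” described above can also be scripted rather than eyeballed. The following is a minimal Python sketch, not a Panopto feature. It assumes a hypothetical export with one row per viewing session, giving a viewer identifier and the position (in seconds) at which that session stopped; the real Panopto (ReCap) download may be laid out differently. The column names `viewer` and `stop_seconds`, the function `find_break_points`, the one-minute bins and the 30% threshold are all illustrative assumptions, and the sample rows are invented. The sketch buckets stop positions into time bins and reports the bins where a large share of distinct viewers stopped before the end of the video.

```python
import csv
import io
from collections import defaultdict

# Hypothetical export: one row per viewing session, giving the viewer and the
# position (in seconds) at which that session stopped. These rows are invented
# for illustration; the real Panopto (ReCap) download may use other columns.
SAMPLE_EXPORT = """viewer,stop_seconds
s01,372
s02,365
s03,610
s04,381
s05,95
s06,600
"""


def find_break_points(rows, video_length_s, bin_s=60, min_share=0.3):
    """Return (bin_start_s, share) pairs for time bins in which at least
    min_share of all distinct viewers stopped before the end of the video."""
    stoppers = defaultdict(set)  # bin start (s) -> viewers who stopped there
    viewers = set()
    for row in rows:
        viewer = row["viewer"]
        stop = float(row["stop_seconds"])
        viewers.add(viewer)
        if stop < video_length_s:  # watching to the end is not a break point
            stoppers[int(stop // bin_s) * bin_s].add(viewer)
    if not viewers:
        return []
    return sorted(
        (start, len(names) / len(viewers))
        for start, names in stoppers.items()
        if len(names) / len(viewers) >= min_share
    )


rows = list(csv.DictReader(io.StringIO(SAMPLE_EXPORT)))
for start, share in find_break_points(rows, video_length_s=600):
    print(f"{start // 60}-{start // 60 + 1} min: {share:.0%} of viewers stopped")
```

With the invented sample above, this prints `6-7 min: 50% of viewers stopped`. Counting distinct viewers per bin, rather than raw stops, keeps one viewer who pauses repeatedly from dominating the result. A flagged bin is only a prompt to look at what happens in the recording at that point, not an explanation in itself.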

Why did you do it?

The Executive MBA at NUBS is a small group of extremely high-performing individuals who are highly engaged but time-poor. If they have not done the work for a session, it is usually because of pressing real-world issues within their business. I was therefore determined to adapt and flex the sessions to maximise their value to them as learners. This entailed a higher level of preparation than, say, for a typical undergraduate class, in some cases requiring me to completely rewrite my seminar plans or design.

Does it work?

The interesting thing about rewriting and delivering an alternative seminar is that the students have no baseline of the original seminar to compare against! The students weren't actually told that I was rewriting the seminar approach and design to suit how they were digesting the module content, so they were not aware of it and there is no student feedback on that specifically. However, I did find that attendance was extremely good (usually 12 out of 14), and I received a couple of apology emails from Executive MBA students who were sorry to miss sessions, one noting that they'd heard the class had had some good discussions and was very sorry to have missed them.

What I did find during the teaching was that the classroom conversation (on Teams) was very free-flowing. As a class, we never seemed to stop talking for the full hour. I feel this was because, rather than stalling on areas with clear gaps in knowledge or turning the session into a “mini-lecture” to resolve those gaps, I was able to use the case more actively to highlight the learning points. Student feedback from the seminars was good, and students engaged in positive learning behaviours afterwards: the analytics clearly showed that several students went back after the seminar and watched videos they had not viewed until that point. While causality is hard to establish here, I would like to infer (as it's positive to think this way!) that the way I used the case to highlight learning points from the materials they hadn't yet engaged with prompted or engaged them enough to go back and complete what they had missed.

I think this approach works very well with very small groups (n<20) of high-quality learners on programmes where the educator has sufficient prep time. Certainly the flipped approach to seminars and the small-chunk approach to the pre-seminar content, both already in use for the class, seemed to gel well with using the analytics to adapt the content. Having the core of the pre-seminar learning within the videos also helped. In flipped seminars where students digest their pre-seminar content from a much wider array of resources, it may be more difficult to rely solely on the Panopto (ReCap) analytics.

Interested in finding out more?

Get in touch with Dr David Grundy, Director of Digital Education.