Dr Nick Riches, Senior Lecturer in Speech and Language Pathology

School of Education, Communication and Language Sciences

Faculty of Humanities and Social Sciences

What did you do?

Used Quizzes in Canvas to integrate the principle of test-enhanced learning into online courses. Encouraged students to keep up with the pace of the course and engage with the learning material using Canvas Quizzes linked to weekly delivery.

Who is involved?

BSc and MSc students studying Speech and Language Pathology.

How did you do it?

Quizzes are created in Canvas to check the learning on a particular topic, linked to a lecture. They sit within a Canvas module with their related materials. In the MSc courses these quizzes are purely formative, while in the BSc courses these carry a small number of credits to boost engagement.

The question types selected allow the quizzes to be marked automatically, which saves a lot of time and lets students get feedback based on their answers. The quizzes were set up using the Quizzes tool in Canvas. The questions used a variety of formats, mainly multiple-choice questions (MCQs) with high-quality incorrect answers (distractors) and fill-in-the-gap (cloze) questions. The latter were primarily used as concept checking questions (CCQs), i.e. to determine whether key concepts had been understood.
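As a rough illustration of how automatic marking with answer-specific feedback can work, the Python sketch below marks one multiple-choice and one cloze question. It is not how Canvas implements marking: the class names, the placeholder options and the feedback strings are all hypothetical. Only the cloze prompt reuses a question from this case study.

```python
from dataclasses import dataclass, field


@dataclass
class MultipleChoiceQuestion:
    """An MCQ whose distractors each carry their own feedback (hypothetical model)."""
    prompt: str
    correct: str
    distractor_feedback: dict[str, str] = field(default_factory=dict)

    def mark(self, answer: str) -> tuple[bool, str]:
        if answer == self.correct:
            return True, "Correct."
        # Fall back to generic feedback for an unrecognised option.
        return False, self.distractor_feedback.get(answer, "Incorrect.")


@dataclass
class ClozeQuestion:
    """A fill-in-the-gap question with a set of accepted answers (hypothetical model)."""
    prompt: str
    accepted: set[str]

    def mark(self, answer: str) -> tuple[bool, str]:
        # Ignore case and surrounding spaces so " Parsing " still counts.
        if answer.strip().lower() in self.accepted:
            return True, "Correct."
        return False, "Incorrect. Revisit the definition of this term."


# Placeholder MCQ: the options and feedback are invented for illustration.
mcq = MultipleChoiceQuestion(
    prompt="Placeholder question",
    correct="Option A",
    distractor_feedback={
        "Option B": "This is a common misconception; see the lecture notes.",
        "Option C": "This confuses two related concepts.",
    },
)

cloze = ClozeQuestion(
    prompt="According to theories of morpho-phonological p_____ ...",
    accepted={"parsing"},
)

print(mcq.mark("Option B"))
print(cloze.mark(" Parsing "))
```

Attaching feedback to each distractor, rather than to the question as a whole, is what lets a student see why their particular answer was wrong.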

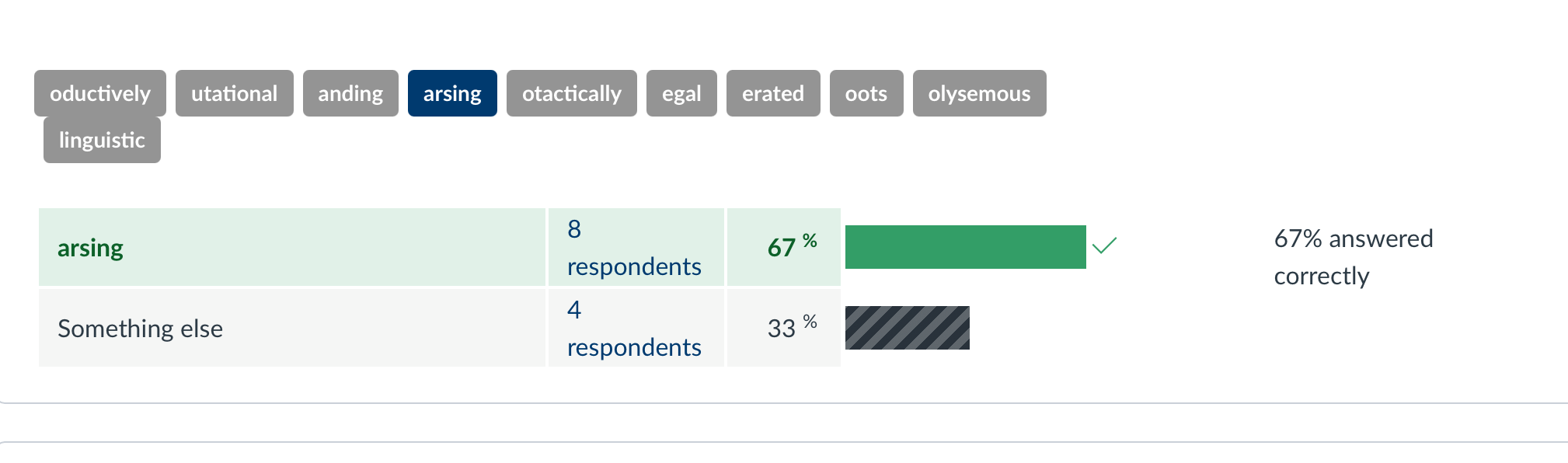

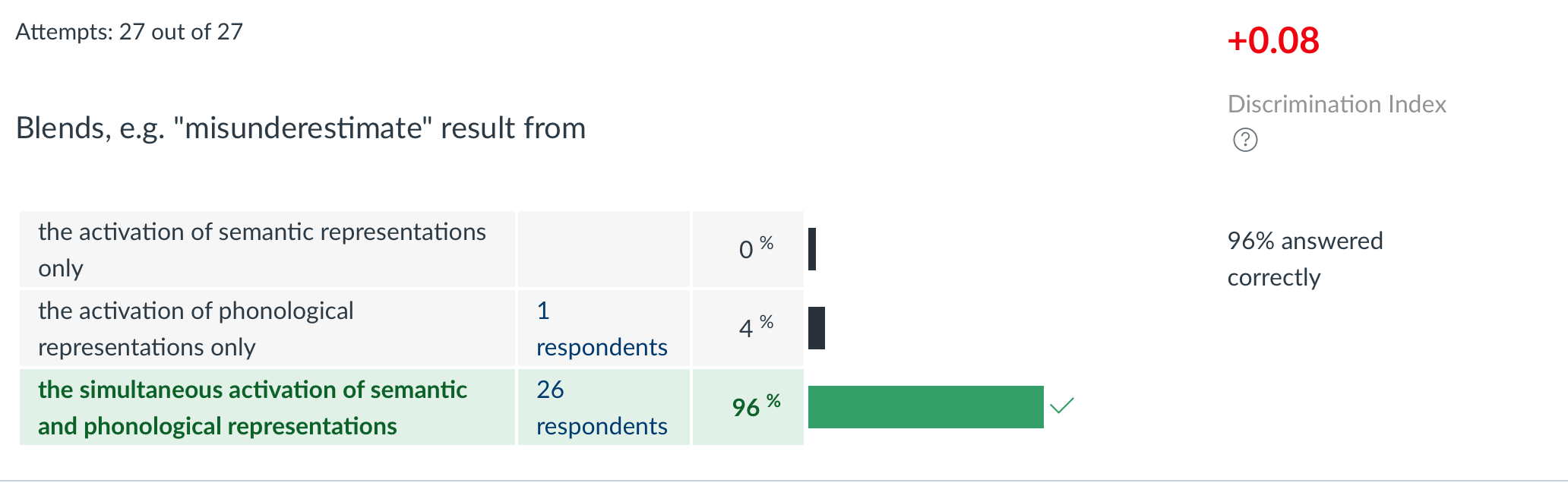

The screenshots show these two types of question in the ‘quiz statistics’ view, which shows how students responded. The examples described below show that automatically marked quizzes can test more than simple recall, moving up Bloom’s Taxonomy (Bloom, 1956) from remembering, to understanding, to applying knowledge.

Testing knowledge and definitions

This cloze question was designed to test students’ understanding of complex terminology. The question was “according to theories of morpho-phonological p[arsing] we actively seek out phonetic material which could potentially be a regular affix.” As we can see, 67% of students gave the correct answer.

This multiple-choice question was designed to test whether students understand how lexical “blends”, such as “misunderestimate”, arise in terms of psycholinguistic processes.

According to the principles of test-enhanced learning, the more actively one retrieves information, the better one retains it. This means that open-ended questions are more effective than multiple-choice questions (Kang, McDermott & Roediger, 2007). In practice, however, a mix of question types is desirable. For example, it would be difficult to devise a cloze question to elicit understanding of blends.

Why did you do it?

This practice is informed by Test-Enhanced Learning (Roediger & Karpicke, 2006). This approach recognises that having students access their knowledge – by being tested on it – helps to embed the learning beyond the short-term. This approach has been embedded in these courses successfully in previous years using Blackboard tools. Canvas Quizzes work well to enable the application of this methodology in the new online environment.

Does it work?

In the BSc courses these quizzes are seeing a high level of engagement among the 36 students in the class, even though it is still early in the course. The quizzes are acting as checkpoints for teachers and students, to make sure the learning is tackled at a reasonable pace and that students are not being left behind.

The analytical tools available provide added insight, given that teachers cannot currently monitor students in the same way they would in Present in Person teaching. Quiz analytics show which items are handled well and which are not. This gives detailed insight into which areas of knowledge have been best understood and which may need clarification. This clarification can be added as automatic quiz feedback or addressed elsewhere. This does not replace in-classroom monitoring, but it does allow individual quizzes and items to be analysed in more depth, in the teacher’s own time, to inform future teaching.
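The kind of item-level check described above can be sketched in a few lines of Python. This is only an illustration on made-up data, not the Canvas quiz statistics implementation: the function names, the item labels and the 50% threshold are assumptions.

```python
def proportion_correct(responses: dict[str, list[bool]]) -> dict[str, float]:
    """Return the share of students who answered each item correctly."""
    return {
        item: sum(marks) / len(marks)
        for item, marks in responses.items()
        if marks  # skip items nobody has attempted yet
    }


def items_to_clarify(
    responses: dict[str, list[bool]], threshold: float = 0.5
) -> list[str]:
    """List items answered correctly by fewer than `threshold` of students, hardest first."""
    scores = proportion_correct(responses)
    flagged = [item for item, score in scores.items() if score < threshold]
    return sorted(flagged, key=lambda item: scores[item])


# Made-up marks: one True/False entry per student for each item.
responses = {
    "item_1": [True, True, False],
    "item_2": [False, False, True, False],
    "item_3": [True, True, True, True],
}

print(proportion_correct(responses))
print(items_to_clarify(responses))
```

Items that fall below the chosen threshold are candidates for extra feedback or for revisiting in a later session; the threshold itself is a judgment call for the teacher.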

Tips

- Include high-quality distractors, such as common misconceptions, along with feedback on why these answers are incorrect.

- Though good quizzes can take time to set up, remember that they can be re-used year after year.

- Play around with quizzes in your sandbox course to explore the different features.

- Don’t forget to click ‘save’ as you add each question!

Any Useful Resources?

Canvas Guide on Quizzes: https://community.canvaslms.com/t5/Instructor-Guide/How-do-I-create-a-quiz-with-individual-questions/ta-p/1248

Bloom, B.S. (1956) Taxonomy of educational objectives: the classification of educational goals. 1st edn. New York: Longmans, Green.

Brame, C. (2013) Writing Good Multiple Choice Test Questions. Available at: https://cft.vanderbilt.edu/guides-sub-pages/writing-good-multiple-choice-test-questions/

Roediger, H.L. and Karpicke, J.D. (2006) ‘Test-Enhanced Learning: Taking Memory Tests Improves Long-Term Retention’, Psychological Science, 17(3), pp. 249-255.

Kang, S. H. K., McDermott, K. B., & Roediger, H. L., III (2007). Test format and corrective feedback modify the effect of testing on long-term retention. European Journal of Cognitive Psychology, 19(4–5), 528–558. Available at: https://doi.org/10.1080/09541440601056620

Roediger, H. L., & Karpicke, J. D. (2006). The Power of Testing Memory: Basic Research and Implications for Educational Practice. Perspectives on Psychological Science, 1(3), 181–210. Available at: https://doi.org/10.1111/j.1745-6916.2006.00012.x